LLM Open Connector + MuleSoft + Cerebras

Learn how to implement Salesforce's LLM Open Connector with MuleSoft Anypoint Platform using its AI Chain Connector and Inference Connector.

This recipe implements an example of Cerebras Inference; however, the high-level process is the same for all models and providers.

The steps in this recipe use Anypoint Studio. For instructions using Anypoint Code Builder (ACB), see LLM Open Connector + MuleSoft + Ollama.

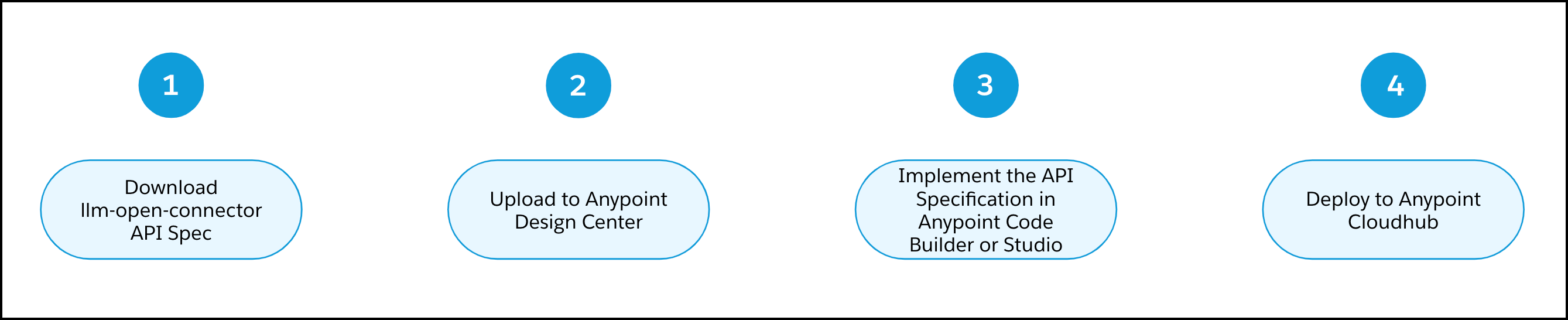

High-level Process

There are four high-level steps for connecting your MuleSoft model endpoint to the LLM Open Connector.

Prerequisites + Tutorial Video

Before you begin, review the prerequisites:

- You have a MuleSoft account.

- You have Anypoint Code Builder (ACB) or Anypoint Studio installed. The instructions in this recipe are based on Anypoint Studio.

- You have the Inference Connector installed.

- You have a Cerebras account with an API key.

View a step-by-step tutorial video that covers an implementation similar to the one covered in this recipe:

Step 1: Download the API Specification for the LLM Open Connector

- Download the LLM Open Connector API spec.
- Rename the file from `llm-open-connector.yml` to `llm-open-connector.yaml`.

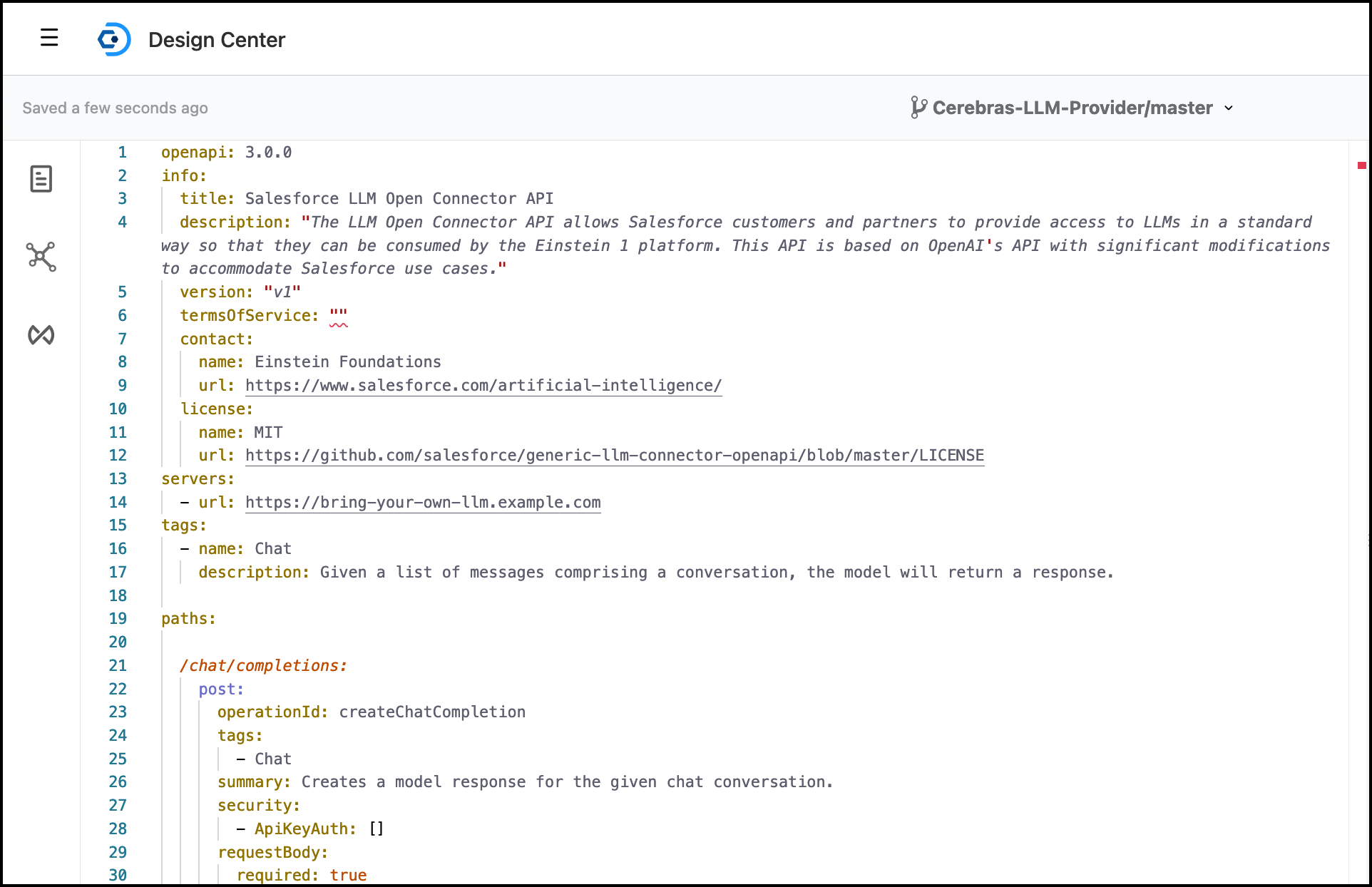

Step 2: Import the API Specification into Anypoint Design Center

- Log in to your MuleSoft account.
- Go to Anypoint Design Center.
- Import a new API specification from a file using these values:
  - Project Name: `Cerebras-LLM-Provider`
  - API Specification: Select `REST API`
  - File upload: `llm-open-connector.yaml`. Be sure to use the renamed file.
- Click Import.
- Verify that the API specification was imported successfully.

-

Change

termsOfService: ""totermsOfService: "-". -

Remove

servers:and the exampleurl.

servers:

- url: https://bring-your-own-llm.example.com

-

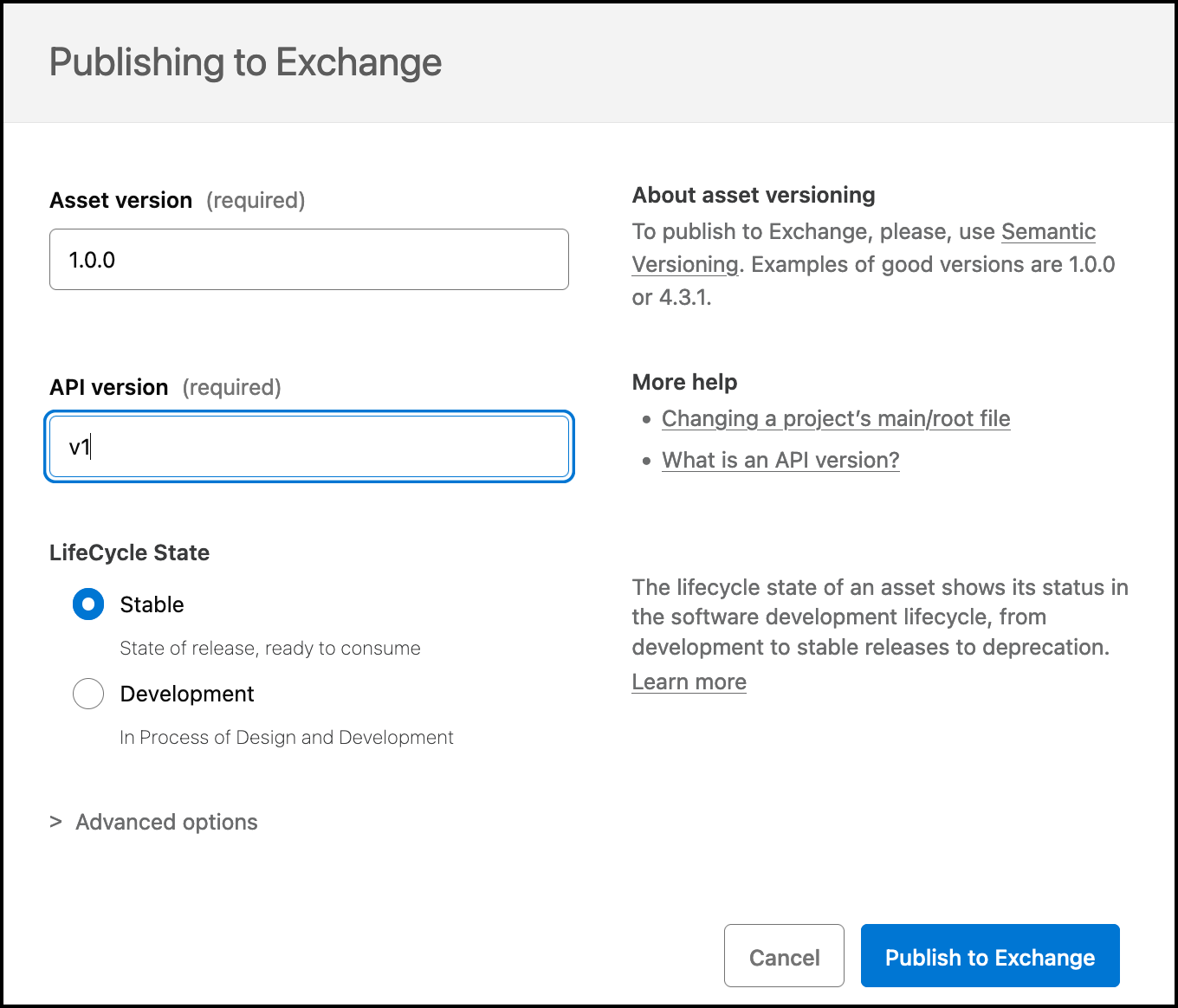

Click Publish.

- Provide versioning information:
  - Asset version: `1.0.0`
  - API version: `v1`
  - Lifecycle State: `Stable`
- Click Publish to Exchange.
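You may prefer to script the two spec edits (the `termsOfService` value and the `servers` block) and apply them to your local `llm-open-connector.yaml` before importing it. The minimal Python sketch below does that. It is a line-based text edit, not a YAML parser. It assumes `servers:` is a top-level key and that every line in its block is indented, starts with `-`, or is blank. The names `patch_spec_text` and `patch_spec_file` are hypothetical helpers, not part of any MuleSoft tooling.

```python
import re
from pathlib import Path


def patch_spec_text(text: str) -> str:
    """Set an empty termsOfService to "-" and drop the top-level servers block.

    Line-based sketch, not a YAML parser: assumes `servers:` starts at column 0
    and that every line belonging to it is indented, starts with "-", or is blank.
    """
    # termsOfService: ""  ->  termsOfService: "-"  (indentation is preserved)
    text = re.sub(r'^([ \t]*termsOfService:[ \t]*)""[ \t]*$', r'\1"-"',
                  text, flags=re.MULTILINE)

    kept = []
    in_servers = False
    for line in text.splitlines():
        if line.startswith("servers:"):
            in_servers = True  # start of the block to remove
            continue
        if in_servers and (line.startswith((" ", "\t", "-")) or not line.strip()):
            continue  # still inside the servers block
        in_servers = False
        kept.append(line)
    return "\n".join(kept) + "\n"


def patch_spec_file(path: str) -> None:
    """Rewrite the spec file in place using patch_spec_text."""
    spec = Path(path)
    spec.write_text(patch_spec_text(spec.read_text(encoding="utf-8")), encoding="utf-8")
```

For example, call `patch_spec_file("llm-open-connector.yaml")` once after renaming the file. Then review the result in Design Center before publishing.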

Step 3: Implement the API Specification

This cookbook uses Anypoint Studio to implement the API Specification. If you prefer, you can also implement the spec with Anypoint Code Builder.

Import API Specification into Studio

-

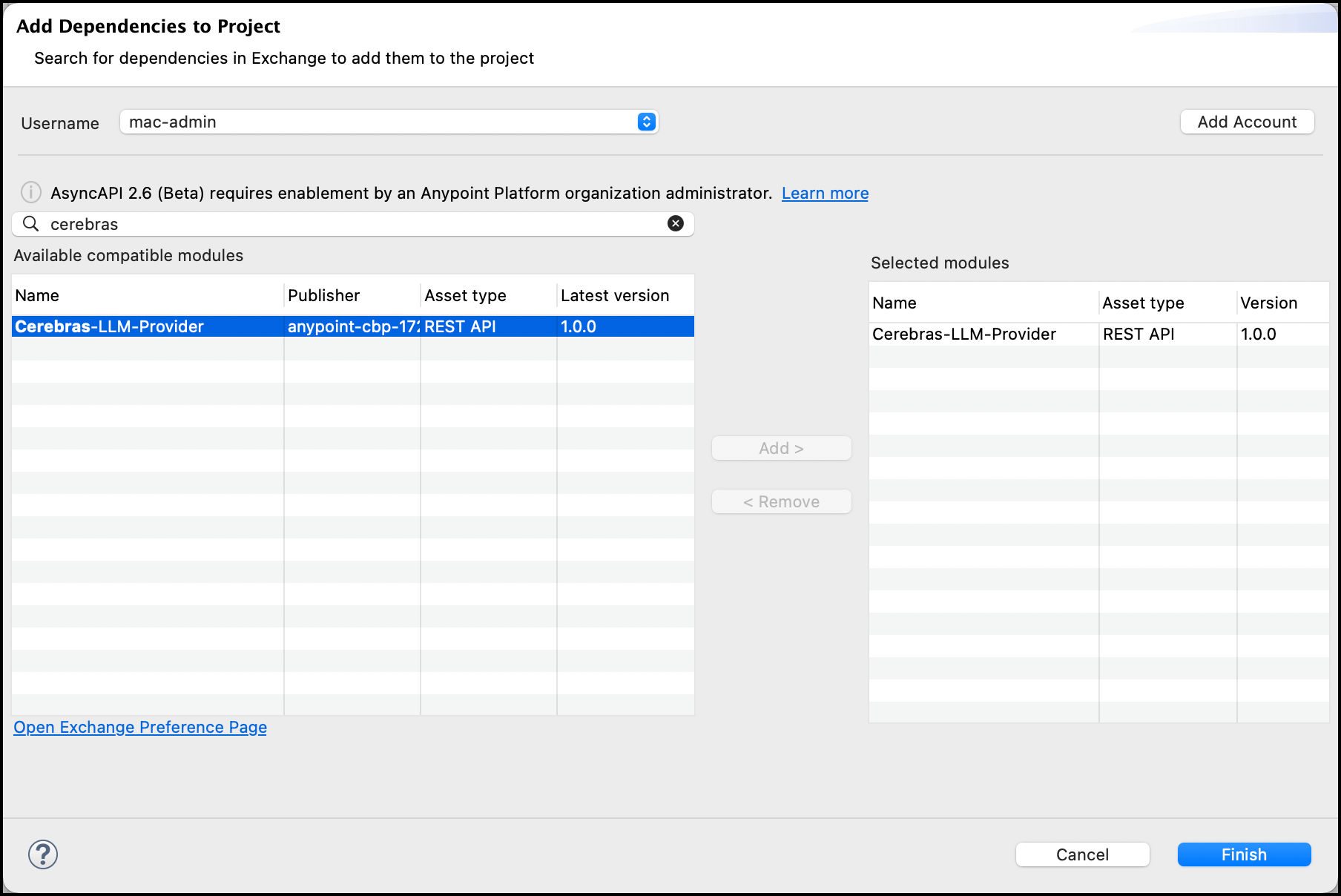

Open Anypoint Studio and create a Mule Project.

-

Name the project and import an API spec:

- Project Name:

cerebras-llm-provider, - Import a published API: Select the

Cerebras-LLM-ProviderAPI spec from the previous step.

- Project Name:

- Click Finish.

Add the Inference Connector to Your Project

- If you have not installed the Inference Connector, install it before you start.

- Add the Inference Connector dependency to the `pom.xml` file of the Mule project:

```xml
<dependency>
    <groupId>com.mulesoft.connectors</groupId>
    <artifactId>mac-inference-chain</artifactId>
    <version>0.1.0</version>
    <classifier>mule-plugin</classifier>
</dependency>
```

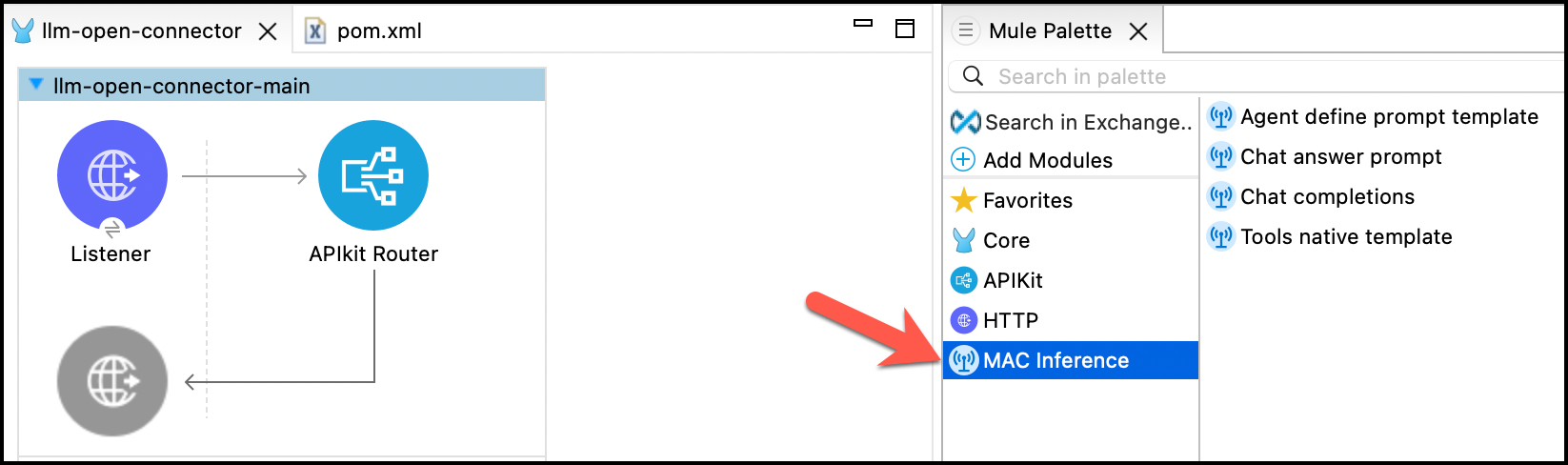

- Make sure the Inference Connector is present in the Mule Palette.
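You may want to confirm the dependency is declared without opening Studio, for example in a CI check. The Python sketch below reads `pom.xml` and looks the dependency up. It assumes the pom declares the standard Maven namespace, which Mule project pom files normally do. It only inspects the top-level `<dependencies>` element. `find_dependency` is a hypothetical helper name.

```python
import xml.etree.ElementTree as ET
from typing import Optional

# Assumption: the pom declares the standard Maven POM namespace.
MAVEN_NS = {"m": "http://maven.apache.org/POM/4.0.0"}


def find_dependency(pom_xml: str, group_id: str, artifact_id: str) -> Optional[dict]:
    """Return version/classifier of a top-level <dependency>, or None if absent.

    Sketch only: looks at <project><dependencies>, not <dependencyManagement>,
    and returns None for a pom without the Maven namespace.
    """
    root = ET.fromstring(pom_xml)
    for dep in root.findall("m:dependencies/m:dependency", MAVEN_NS):
        if (dep.findtext("m:groupId", namespaces=MAVEN_NS) == group_id
                and dep.findtext("m:artifactId", namespaces=MAVEN_NS) == artifact_id):
            return {
                "version": dep.findtext("m:version", namespaces=MAVEN_NS),
                "classifier": dep.findtext("m:classifier", namespaces=MAVEN_NS),
            }
    return None
```

For example, `find_dependency(open("pom.xml", encoding="utf-8").read(), "com.mulesoft.connectors", "mac-inference-chain")` should return the version and the `mule-plugin` classifier once the dependency is in place.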

Implement the Chat Completions Endpoint

-

Go to the scaffolded flow

post:\chat\completions:application\json:llm-open-connector-config. -

Drag and drop

Chat completionsoperation from the Inference Connector into the Flow. -

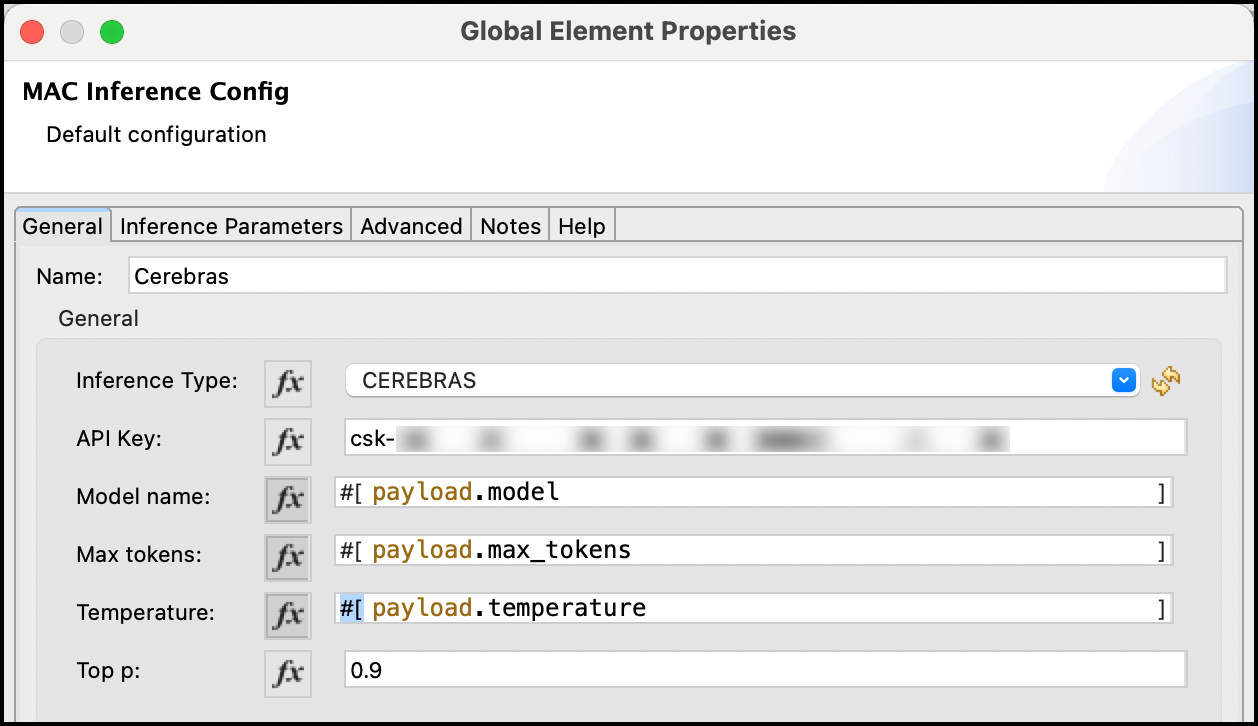

Provide the Inference connector configuration for Cerebras.

-

Parametrize all properties needed by the LLM Open Connector API Spec.

.

. -

In the

Chat completionsoperation, enter:payload.messages. -

Before the

Chat completionsoperation, add theSet Variableoperation with the namemodeland enter in the expression valuepayload.model. -

After the

Chat completionsoperation, add theTransform Messageoperation and provide the mapping in this code block:

```dataweave
%dw 2.0
output application/json
---
{
    id: "chatcmpl-" ++ now() as Number as String,
    created: now() as Number,
    usage: {
        completion_tokens: attributes.tokenUsage.outputCount,
        prompt_tokens: attributes.tokenUsage.inputCount,
        total_tokens: attributes.tokenUsage.totalCount
    },
    model: vars.model,
    choices: [
        {
            finish_reason: "stop",
            index: 0,
            message: {
                content: payload.response default "",
                role: "assistant"
            }
        }
    ],
    object: "chat.completion"
}
```

- Save the project.
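The Python sketch below restates the DataWeave mapping to make it explicit. It shows the connector's token counts being renamed to OpenAI-style fields: `outputCount` becomes `completion_tokens`, `inputCount` becomes `prompt_tokens`, and `totalCount` becomes `total_tokens`. `to_chat_completion` is a hypothetical function for illustration only and is not part of the Mule application. One deliberate difference: the DataWeave script calls `now()` twice, while this sketch reuses a single timestamp for `id` and `created`.

```python
import time
from typing import Optional


def to_chat_completion(response: Optional[str], token_usage: dict, model: str,
                       created: Optional[int] = None) -> dict:
    """Build an OpenAI-style chat.completion body, mirroring the DataWeave mapping.

    token_usage mirrors attributes.tokenUsage (inputCount, outputCount, totalCount);
    response mirrors payload.response; model mirrors vars.model.
    """
    # `now() as Number` yields epoch seconds; one timestamp is reused here.
    if created is None:
        created = int(time.time())
    return {
        "id": f"chatcmpl-{created}",
        "created": created,
        "usage": {
            "completion_tokens": token_usage["outputCount"],
            "prompt_tokens": token_usage["inputCount"],
            "total_tokens": token_usage["totalCount"],
        },
        "model": model,
        "choices": [
            {
                "finish_reason": "stop",  # hard-coded, as in the DataWeave script
                "index": 0,
                "message": {
                    # equivalent of `payload.response default ""`
                    "content": response if response is not None else "",
                    "role": "assistant",
                },
            }
        ],
        "object": "chat.completion",
    }
```

Called with the token counts from the sample run (42 input, 8 output, 50 total) and `created=1732373228`, this produces the same body as the expected response in the local test.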

Test Locally

-

Start the Mule Application.

-

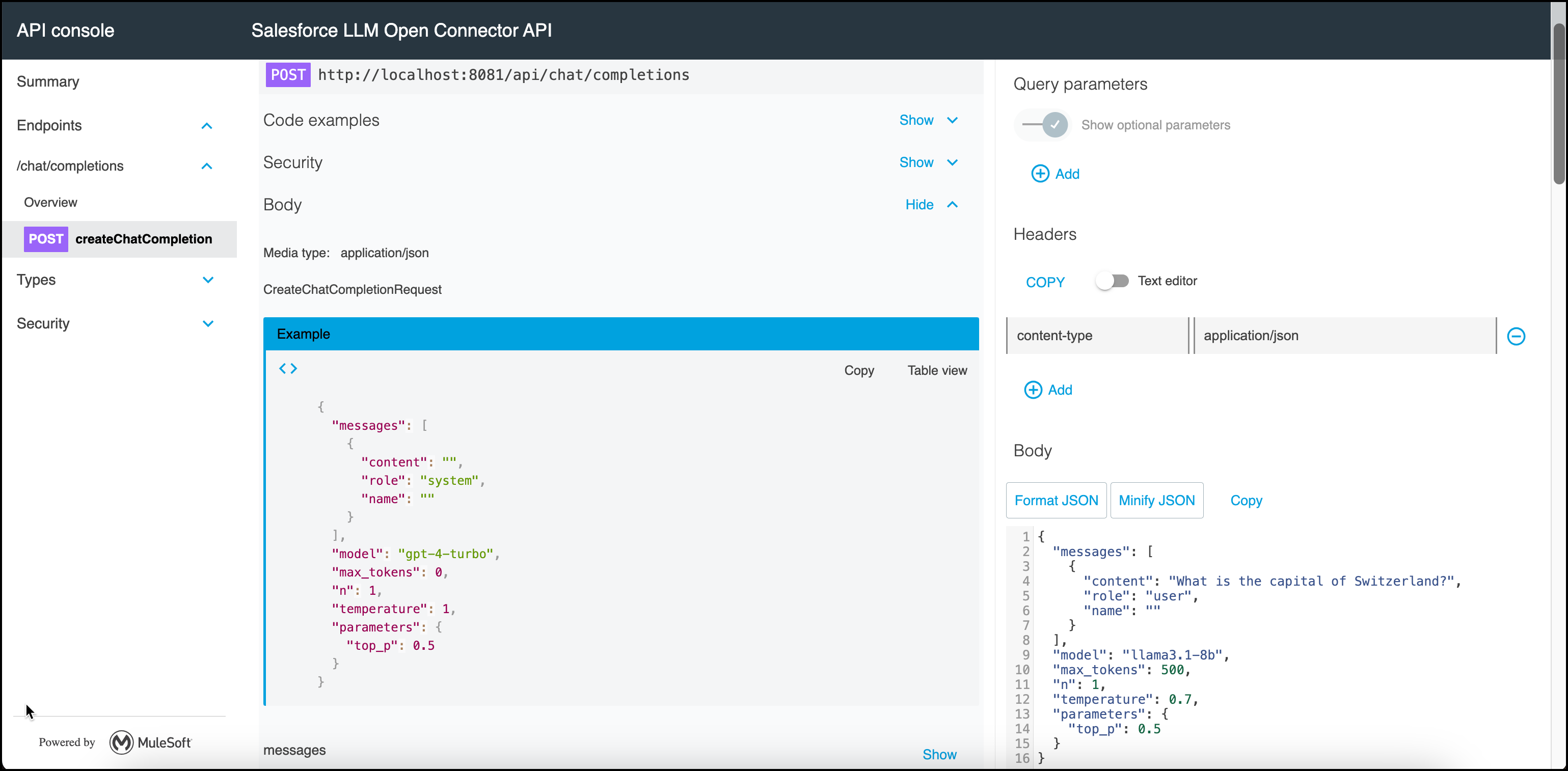

Go to the API Console.

-

Enter the API Key and following payload:

```json
{
  "messages": [
    {
      "content": "What is the capital of Switzerland?",
      "role": "user",
      "name": ""
    }
  ],
  "model": "llama3.1-8b",
  "max_tokens": 500,
  "n": 1,
  "temperature": 0.7,
  "parameters": {
    "top_p": 0.5
  }
}
```

- Validate the result. Make sure the values are mapped correctly for token usage.

```json
{
  "id": "chatcmpl-1732373228",
  "created": 1732373228,
  "usage": {
    "completion_tokens": 8,
    "prompt_tokens": 42,
    "total_tokens": 50
  },
  "model": "llama3.1-8b",
  "choices": [
    {
      "finish_reason": "stop",
      "index": 0,
      "message": {
        "content": "The capital of Switzerland is Bern.",
        "role": "assistant"
      }
    }
  ],
  "object": "chat.completion"
}
```

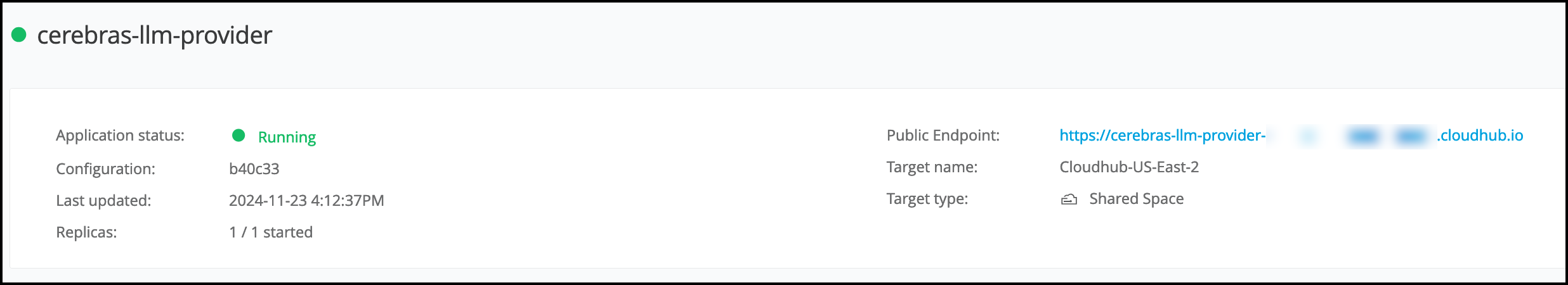
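You can automate the token-usage check in the validation step with a small Python function that reports problems in a response body. It assumes the provider reports `total_tokens` as the sum of `prompt_tokens` and `completion_tokens`, which holds for the sample response (42 + 8 = 50). `validate_chat_completion` is a hypothetical helper name.

```python
def validate_chat_completion(resp: dict) -> list:
    """Return a list of problems found in a chat.completion response (empty if none)."""
    problems = []

    usage = resp.get("usage") or {}
    for key in ("prompt_tokens", "completion_tokens", "total_tokens"):
        value = usage.get(key)
        # bool is a subclass of int, so rule it out explicitly
        if not isinstance(value, int) or isinstance(value, bool) or value < 0:
            problems.append(f"usage.{key} is missing or not a non-negative integer")
    # Assumption: total is reported as prompt + completion
    if not problems and usage["prompt_tokens"] + usage["completion_tokens"] != usage["total_tokens"]:
        problems.append("usage.total_tokens != prompt_tokens + completion_tokens")

    if resp.get("object") != "chat.completion":
        problems.append('object is not "chat.completion"')

    choices = resp.get("choices") or []
    message = choices[0].get("message", {}) if choices else {}
    if message.get("role") != "assistant" or not message.get("content"):
        problems.append("choices[0].message is missing, empty, or not from the assistant")

    return problems
```

An empty list means the mapping produced the expected fields. A message such as `usage.prompt_tokens is missing ...` usually points to a wrong `attributes.tokenUsage` field name in the Transform Message mapping.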

Step 4: Deploy to Anypoint CloudHub

- After the application is tested successfully, deploy it to Anypoint CloudHub.
- Right-click your Mule project and navigate to Anypoint Platform > Deploy to CloudHub.
- Choose the environment you want to deploy to.
- Enter the required values:
  - App name: `cerebras-llm-provider`
  - Deployment target: Shared Space (CloudHub 2.0)
  - Replica Count: 1
  - Replica Size: Micro (0.1 vCore)
- Click Deploy Application.
- Wait until the application is deployed.

If you receive the error [The asset is invalid, Error while trying to set type: app. Expected type is: rest-api.], go to Exchange and delete or rename the asset. This error is a known issue.
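Before you register the endpoint in Model Builder, you may want to smoke-test the deployed application directly. The Python sketch below builds a request similar to the one sent from the API Console and posts it to `/chat/completions` under the base URL. The sketch rests on two assumptions. First, the API key is sent in an `api-key` header; confirm this against the `securitySchemes` section of your copy of the spec. Second, `<cloudhub_url>/api` is the base path that precedes `/chat/completions`. `build_chat_request` and `send_chat_request` are hypothetical names.

```python
import json
import urllib.request


def build_chat_request(base_url: str, api_key: str, model: str, prompt: str) -> urllib.request.Request:
    """Build a POST to <base_url>/chat/completions with a minimal chat payload."""
    payload = {
        "messages": [{"content": prompt, "role": "user"}],
        "model": model,
        "max_tokens": 500,
        "n": 1,
        "temperature": 0.7,
    }
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        # Assumption: the spec's API key header is named "api-key".
        headers={"Content-Type": "application/json", "api-key": api_key},
        method="POST",
    )


def send_chat_request(req: urllib.request.Request, timeout: float = 30.0) -> dict:
    """Send the request and decode the JSON response body."""
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))
```

Replace `<cloudhub_url>` with your application's URL and call `send_chat_request(build_chat_request("<cloudhub_url>/api", "<your-api-key>", "llama3.1-8b", "What is the capital of Switzerland?"))`. Repeat the call with `llama3.1-70b` to check both models before you create their configurations.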

Create a Configuration in Model Builder

After your API is running on CloudHub, you need to add the model endpoint to Model Builder.

-

In Salesforce, open Data Cloud.

-

Navigate to Einstein Studio.

-

Click Add Foundation Model, and click Connect to your LLM.

-

Click Next.

-

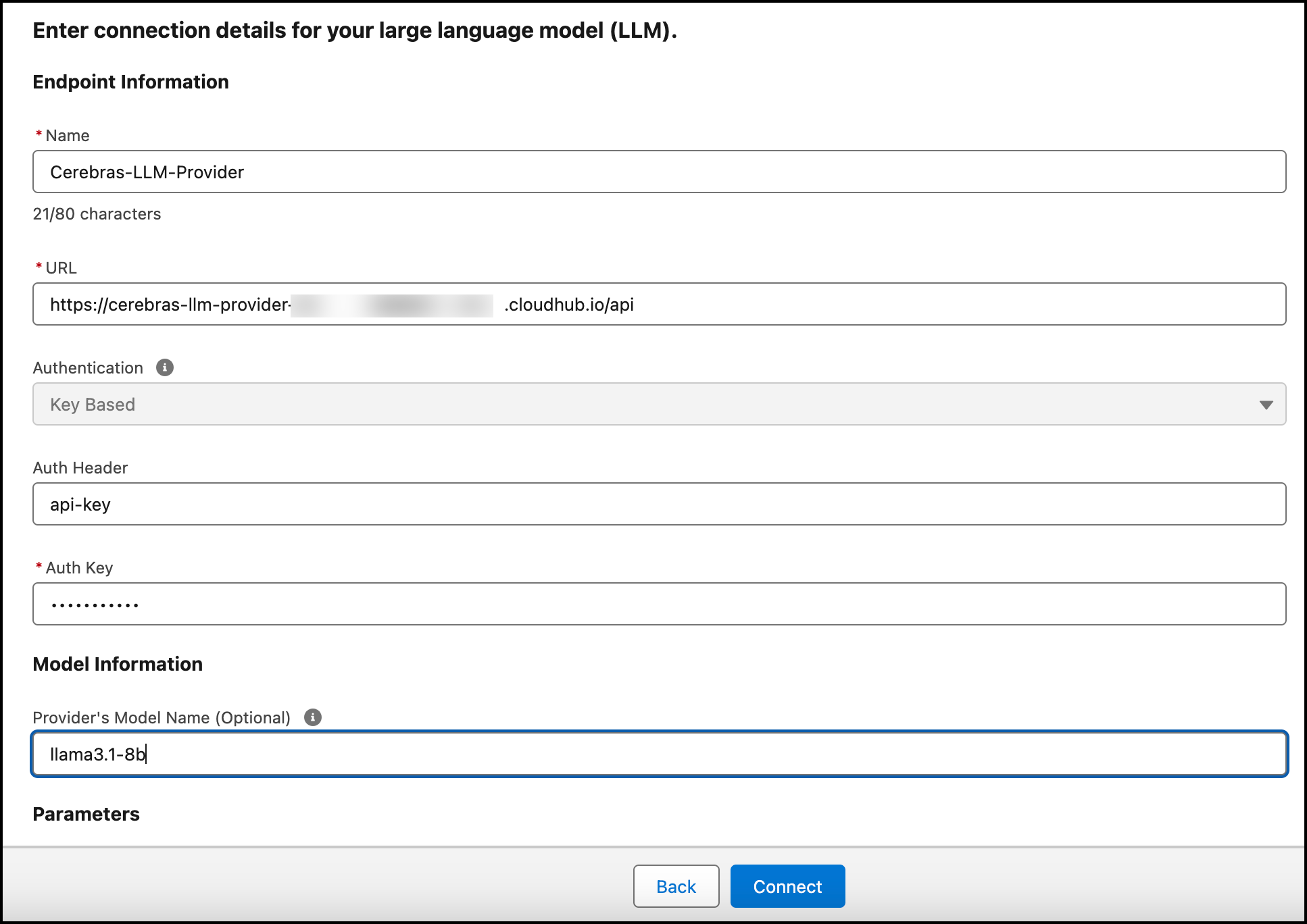

Enter the required values:

- Name:

Cerebras-LLM-Provider - URL:

<cloudhub_url>/api - Model: A model name is required. For this recipe, choose between

llama3.1-70borllama3.1-8b.

- Name:

-

Click Connect.

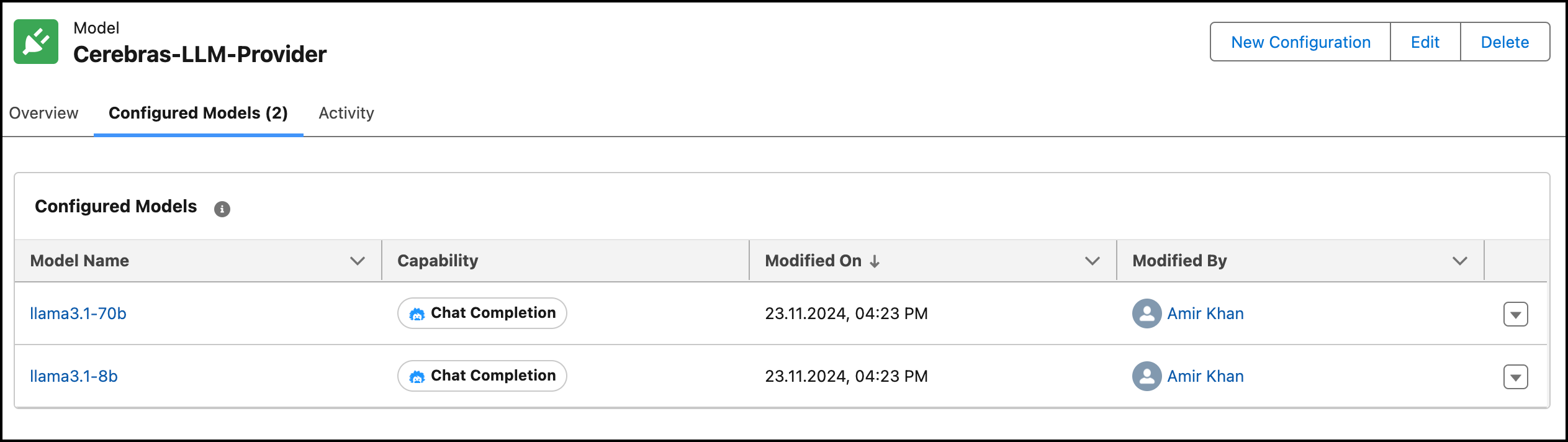

- Create two configurations, one for each supported model: `llama3.1-70b` and `llama3.1-8b`.

Important Considerations

- This cookbook uses the Cerebras models `llama3.1-70b` and `llama3.1-8b`.
- When configuring in Model Builder, you need to provide a default value for the model. In this recipe the model name is parametrized, so a value is required.

- The API is deployed under the governance of the MuleSoft Anypoint Platform. As a result:

  - You can monitor the application by viewing logs and errors.
  - You can apply additional security through Anypoint's API management capabilities.
  - You can scale horizontally by deploying multiple replicas, and vertically by choosing a larger replica size.

Conclusion

This cookbook demonstrates how to set up an LLM Open Connector using MuleSoft for the Cerebras Chat Completions endpoint. This recipe is a sandbox implementation and is not production-ready. For production use, optimize your implementation based on your specific requirements and expected usage patterns.