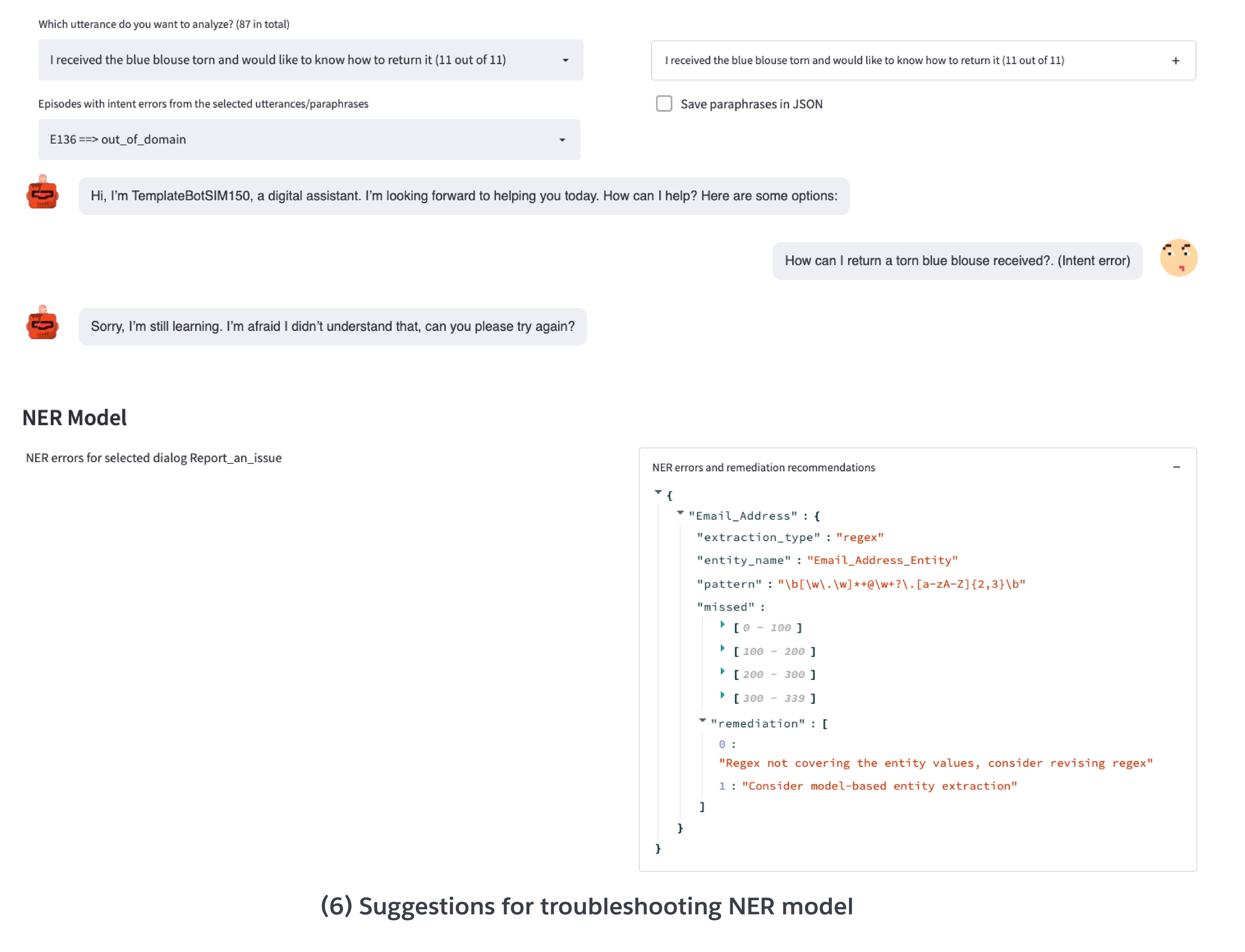

Apply Intent Model Remediation Suggestions

The most straightforward way to apply the remediation suggestions is to add the recommended misclassified paraphrases to the original training set and retrain the intent model.
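A minimal sketch of this merge step in Python follows. It assumes both the original training set and the remediation suggestions are available as dictionaries that map an intent name to a list of utterances; this in-memory format and the helper name `augment_training_set` are hypothetical and are not BotSIM's own data structures.

```python
from typing import Dict, List


def augment_training_set(
    original: Dict[str, List[str]],
    suggestions: Dict[str, List[str]],
) -> Dict[str, List[str]]:
    """Return a new training set with suggested paraphrases appended per intent.

    Hypothetical helper: both arguments map an intent name to its utterances.
    Exact duplicates (compared after stripping whitespace) are skipped so that
    retraining does not over-weight repeated examples. `original` is not mutated.
    """
    # Copy each list so the caller's original training set stays unchanged.
    augmented = {intent: list(utts) for intent, utts in original.items()}
    for intent, paraphrases in suggestions.items():
        utterances = augmented.setdefault(intent, [])
        seen = {u.strip() for u in utterances}
        for paraphrase in paraphrases:
            text = paraphrase.strip()
            if text and text not in seen:
                utterances.append(text)
                seen.add(text)
    return augmented


# Illustrative data; the first utterance is made up for this example.
original = {"Report an Issue": ["My order arrived damaged."]}
suggestions = {
    "Report an Issue": [
        "I would like to complain about my problem. What should I do?",
        "My order arrived damaged.",  # already in the training set, skipped
    ]
}
merged = augment_training_set(original, suggestions)
print(len(merged["Report an Issue"]))
```

Deduplicating here is a design choice of the sketch; whether repeated utterances should be kept depends on how the target platform weights training examples.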

For the Einstein BotBuilder platform, the augmented training set can be saved as a CSV file that defines a new intent set. The CSV file can be deployed to the user's org via Salesforce Workbench, and the intent model can then be retrained by associating the new intent set, report_issue_dev_augmented, with the "Report an Issue" intent. Example rows are shown below.

| MIDomainName | MIIntentName | Utterance |
|---|---|---|
| TemplateBotSIM_intent_sets | report_issue_dev_augmented | The detergent powder was not properly packaged and the powder is all over my food. It wasn’t properly packed. |
| TemplateBotSIM_intent_sets | report_issue_dev_augmented | I would like to complain about my problem. What should I do? |
| TemplateBotSIM_intent_sets | report_issue_dev_augmented | I want to reset my account password for my bank credit card. I forgot it. |
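A CSV with the three columns from the table above can be produced with Python's standard `csv` module, as in the sketch below. `write_intent_set_csv` is a hypothetical helper; the sketch only builds the file contents and does not cover deployment through Workbench or any additional columns your org might require.

```python
import csv
import io
from typing import Iterable, TextIO

# Column names taken from the intent set table above.
FIELDNAMES = ["MIDomainName", "MIIntentName", "Utterance"]


def write_intent_set_csv(
    out: TextIO,
    domain_name: str,
    intent_set_name: str,
    utterances: Iterable[str],
) -> int:
    """Write a header plus one row per utterance; return the number of rows written."""
    writer = csv.DictWriter(out, fieldnames=FIELDNAMES)
    writer.writeheader()
    count = 0
    for utterance in utterances:
        # csv.DictWriter quotes fields automatically if they contain commas or quotes.
        writer.writerow({
            "MIDomainName": domain_name,
            "MIIntentName": intent_set_name,
            "Utterance": utterance,
        })
        count += 1
    return count


buf = io.StringIO()
n = write_intent_set_csv(
    buf,
    "TemplateBotSIM_intent_sets",
    "report_issue_dev_augmented",
    ["I would like to complain about my problem. What should I do?"],
)
print(n)
print(buf.getvalue())
```

When writing to a real file instead of a `StringIO`, open it with `newline=""`, as the `csv` module documentation recommends, so line endings are not doubled.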

For Dialogflow CX, the recommended paraphrases can be added back to the corresponding training set, and the intent model will be retrained automatically.
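As an illustration, the sketch below appends plain-text paraphrases to an intent held as a Python dict shaped like the Dialogflow CX REST `Intent` resource, where `trainingPhrases` is a list of objects with `parts` (each carrying `text`) and `repeatCount`. It assumes no entity annotations are needed and only builds the updated payload; sending it back to the agent (through the console or the Intents API) is not shown. `add_training_phrases` is a hypothetical helper.

```python
import copy
from typing import Any, Dict, List


def add_training_phrases(intent: Dict[str, Any], phrases: List[str]) -> Dict[str, Any]:
    """Return a copy of a CX intent dict with new un-annotated training phrases.

    Hypothetical helper: each phrase becomes a single text part. Phrases whose
    full text already matches an existing training phrase are skipped.
    """
    updated = copy.deepcopy(intent)  # leave the caller's intent untouched
    existing = updated.setdefault("trainingPhrases", [])
    # Reassemble each existing phrase from its parts to compare by full text.
    seen = {
        "".join(part.get("text", "") for part in tp.get("parts", []))
        for tp in existing
    }
    for text in phrases:
        if text in seen:
            continue
        existing.append({"parts": [{"text": text}], "repeatCount": 1})
        seen.add(text)
    return updated


# Illustrative intent; the existing phrase is made up for this example.
intent = {
    "displayName": "Report an Issue",
    "trainingPhrases": [
        {"parts": [{"text": "My order arrived damaged."}], "repeatCount": 1}
    ],
}
updated = add_training_phrases(
    intent,
    [
        "My order arrived damaged.",
        "I would like to complain about my problem. What should I do?",
    ],
)
print(len(updated["trainingPhrases"]))
```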

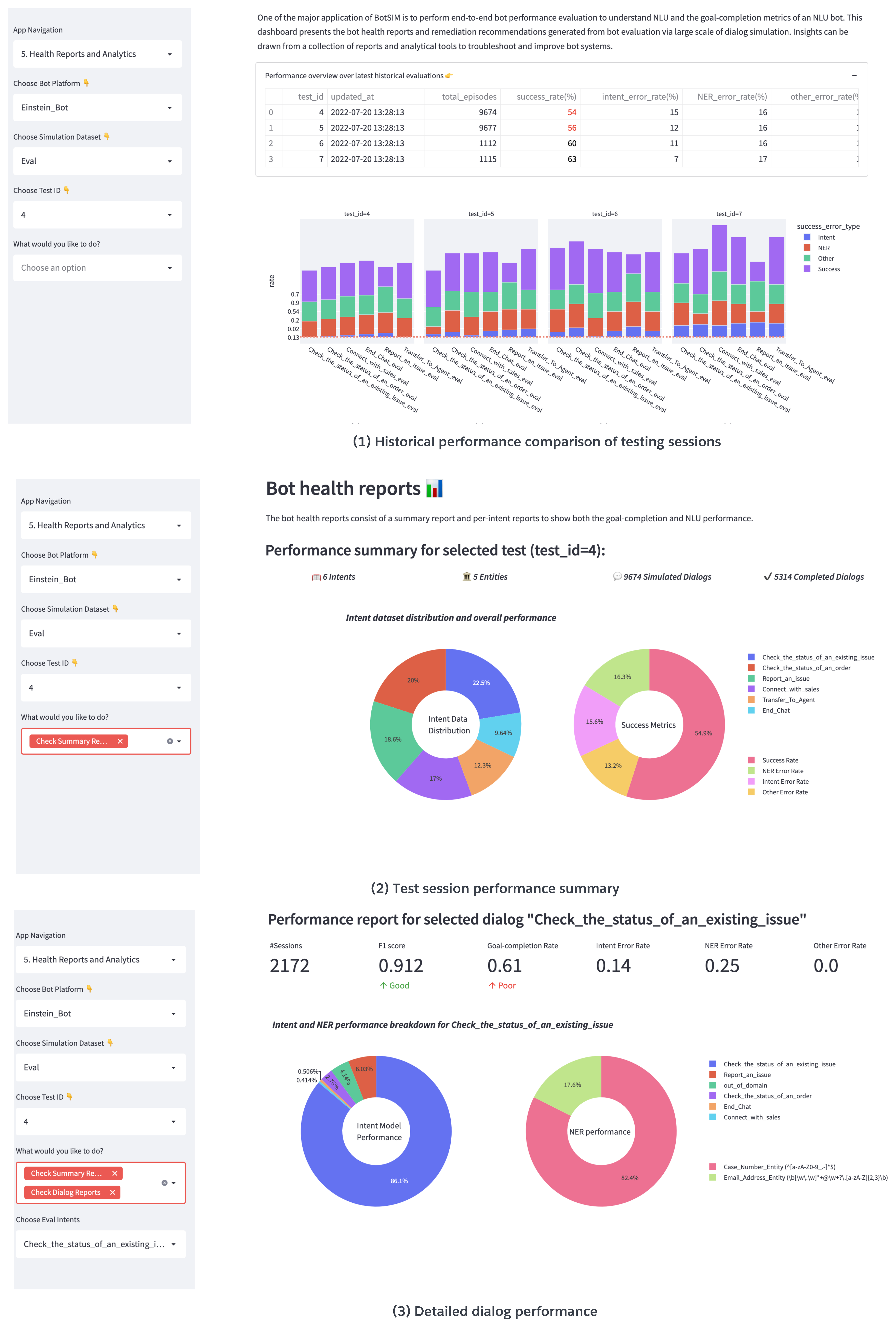

The table below compares per-intent F1 scores before and after retraining the intent model, evaluated on simulation goals created from the same evaluation set.

| Model | Transfer to Agent | End Chat | Connect with Sales | Check Issue Status | Check Order Status | Report an Issue |
|---|---|---|---|---|---|---|
| Baseline | 0.92 | 0.95 | 0.89 | 0.93 | 0.94 | 0.82 |
| Retrained | 0.92 | 0.97 | 0.93 | 0.95 | 0.96 | 0.87 |
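For reference, per-intent F1 is commonly computed one-vs-rest: for each intent, F1 = 2·TP / (2·TP + FP + FN), which equals the harmonic mean of precision and recall. The sketch below assumes the gold label of each dialog is the intent of its simulation goal and the prediction is the intent the bot recognized; BotSIM's own evaluation code may compute the scores differently, and `per_intent_f1` is a hypothetical helper.

```python
from typing import Dict, List


def per_intent_f1(gold: List[str], pred: List[str]) -> Dict[str, float]:
    """One-vs-rest F1 for every intent that appears in gold or pred."""
    if len(gold) != len(pred):
        raise ValueError("gold and pred must have the same length")
    pairs = list(zip(gold, pred))
    scores = {}
    for intent in sorted(set(gold) | set(pred)):
        tp = sum(g == intent and p == intent for g, p in pairs)
        fp = sum(g != intent and p == intent for g, p in pairs)
        fn = sum(g == intent and p != intent for g, p in pairs)
        denom = 2 * tp + fp + fn
        # An intent that is never correct, predicted, or expected gets 0.0.
        scores[intent] = 2 * tp / denom if denom else 0.0
    return scores


# Illustrative labels, not taken from the evaluation set.
gold = ["Report an Issue", "Report an Issue", "End Chat", "Transfer to Agent"]
pred = ["Report an Issue", "End Chat", "End Chat", "Transfer to Agent"]
scores = per_intent_f1(gold, pred)
print(scores)
```

Here one "Report an Issue" goal is misrecognized as "End Chat", which costs "Report an Issue" a false negative and "End Chat" a false positive, so both score 2/3.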

After retraining, F1 improves on five of the six intents; only "Transfer to Agent" is unchanged at 0.92. More challenging intents (lower baseline F1), e.g., "Report an Issue (RI)" (0.82 to 0.87) and "Connect with Sales (CS)" (0.89 to 0.93), saw larger gains than easier ones such as "End Chat (EC)" (0.95 to 0.97). This demonstrates the efficacy of BotSIM and is likely due to more paraphrases being selected for retraining the model on the more challenging intents. The table below lists the number of training utterances per intent before and after augmentation.

| Training utterances | Transfer to Agent | End Chat | Connect with Sales | Check Issue Status | Check Order Status | Report an Issue |
|---|---|---|---|---|---|---|
| Original | 150 | 150 | 150 | 150 | 150 | 150 |
| Augmented | 255 | 184 | 212 | 268 | 215 | 294 |
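The two tables can be read together by computing, for each intent, the F1 gain and the number of paraphrases added. The sketch below hard-codes the values from the tables above and is only an arithmetic aid, not part of BotSIM.

```python
# Values copied from the F1 and training-utterance tables above.
BASELINE_F1 = {
    "Transfer to Agent": 0.92, "End Chat": 0.95, "Connect with Sales": 0.89,
    "Check Issue Status": 0.93, "Check Order Status": 0.94, "Report an Issue": 0.82,
}
RETRAINED_F1 = {
    "Transfer to Agent": 0.92, "End Chat": 0.97, "Connect with Sales": 0.93,
    "Check Issue Status": 0.95, "Check Order Status": 0.96, "Report an Issue": 0.87,
}
ORIGINAL_N = 150  # every intent starts with 150 training utterances
AUGMENTED_N = {
    "Transfer to Agent": 255, "End Chat": 184, "Connect with Sales": 212,
    "Check Issue Status": 268, "Check Order Status": 215, "Report an Issue": 294,
}

# Round to two decimals to hide floating-point noise in the subtraction.
gains = {i: round(RETRAINED_F1[i] - BASELINE_F1[i], 2) for i in BASELINE_F1}
added = {i: AUGMENTED_N[i] - ORIGINAL_N for i in BASELINE_F1}

# List intents from hardest (lowest baseline F1) to easiest.
for intent in sorted(BASELINE_F1, key=BASELINE_F1.get):
    print(f"{intent:<20} baseline={BASELINE_F1[intent]:.2f} "
          f"gain={gains[intent]:+.2f} added={added[intent]}")
```

Sorted this way, "Report an Issue" has both the lowest baseline, the largest gain (+0.05), and the most added utterances (144), whereas "Transfer to Agent" received 105 additional utterances with no change in F1, so the number of added paraphrases alone does not account for every gain.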